https://github.com/fuergaosi233/claude-code-proxy

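The previous post used litellm to run Claude Code against Doubao 1.6, but the experience in practice was poor.

I have since found an open-source project (linked above) that works considerably better.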

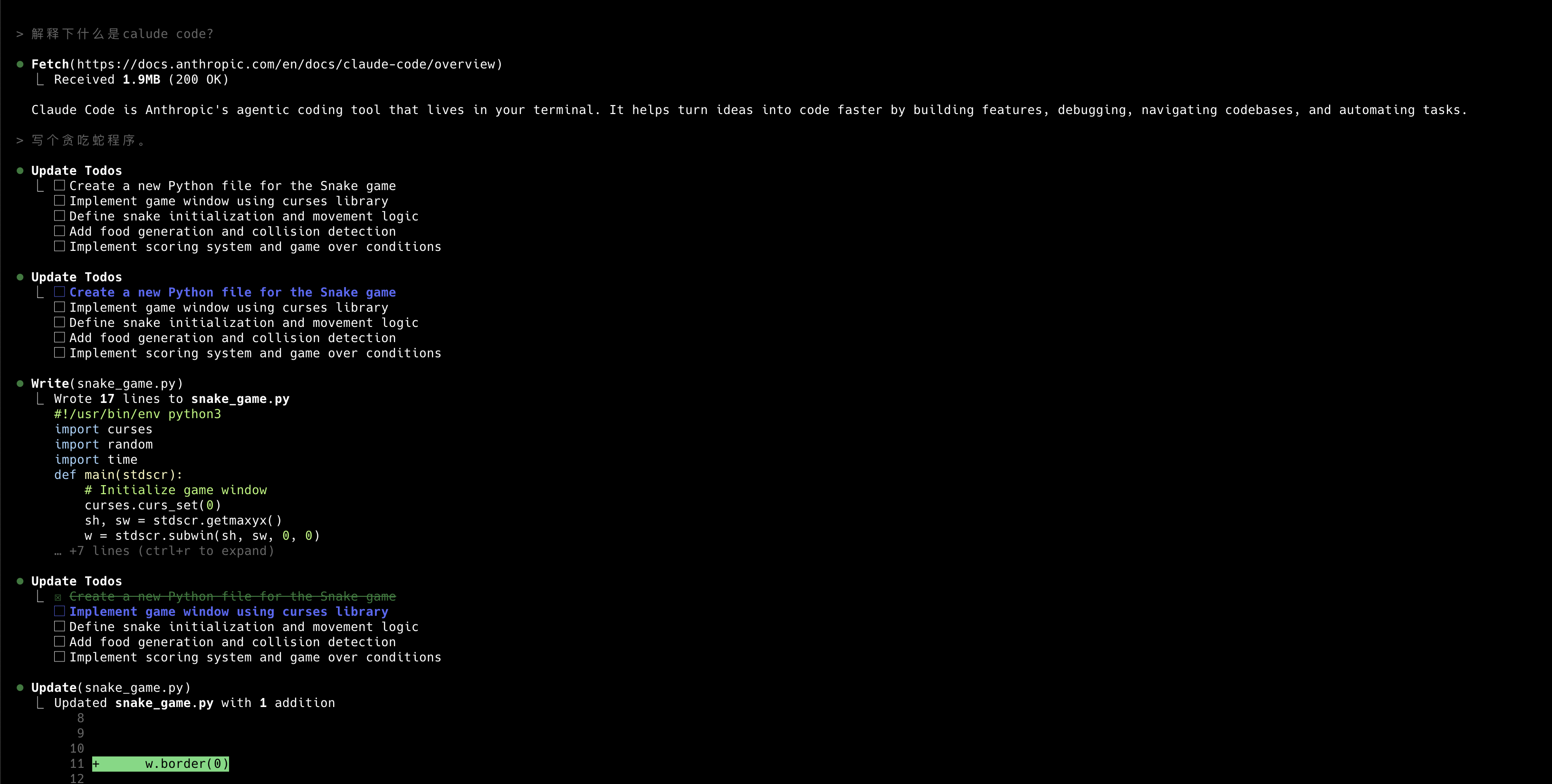

截图如下:

Doubao configuration:

cat .env

# Required: Your OpenAI API key

OPENAI_API_KEY="your-doubao-api-key"

# Optional: OpenAI API base URL (default: https://api.openai.com/v1)

# You can change this to use other providers like Azure OpenAI, local models, etc.

OPENAI_BASE_URL="https://ark.cn-beijing.volces.com/api/v3"

# Optional: Model mappings (BIG and SMALL models)

BIG_MODEL="doubao-seed-1-6-250615"  # Used for Claude sonnet/opus requests

SMALL_MODEL="doubao-seed-1-6-250615"  # Used for Claude haiku requests

# Optional: Server settings

HOST="0.0.0.0"

PORT="8082"

LOG_LEVEL="INFO"  # DEBUG, INFO, WARNING, ERROR, CRITICAL

# Optional: Performance settings

MAX_TOKENS_LIMIT="4096"

# Minimum tokens limit for requests (to avoid errors with thinking model)

MIN_TOKENS_LIMIT="4096"

REQUEST_TIMEOUT="90"

MAX_RETRIES="2"
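To make the model mapping and token limits above easier to reason about, here is a minimal Python sketch. It is not code from claude-code-proxy: `parse_env`, `map_model`, and `check_env` are hypothetical helpers, and the rule "haiku goes to `SMALL_MODEL`, everything else to `BIG_MODEL`" is only an assumption inferred from the comments in the `.env` file. The proxy's actual routing may differ.

```python
# Illustrative only: these helpers are hypothetical and not part of claude-code-proxy.

ENV_TEXT = '''
# Required: Your OpenAI API key
OPENAI_API_KEY="your-doubao-api-key"
OPENAI_BASE_URL="https://ark.cn-beijing.volces.com/api/v3"
BIG_MODEL="doubao-seed-1-6-250615"
SMALL_MODEL="doubao-seed-1-6-250615"
PORT="8082"
MAX_TOKENS_LIMIT="4096"
MIN_TOKENS_LIMIT="4096"
'''


def parse_env(text):
    """Parse KEY="value" lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


def map_model(claude_model, env):
    """Assumed routing: haiku requests use SMALL_MODEL, all others use BIG_MODEL."""
    if "haiku" in claude_model.lower():
        return env["SMALL_MODEL"]
    return env["BIG_MODEL"]


def check_env(env):
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []
    # Only OPENAI_API_KEY is marked "Required" in the .env comments.
    if not env.get("OPENAI_API_KEY"):
        problems.append("missing OPENAI_API_KEY")
    # Compare the limits only when both are present.
    if "MIN_TOKENS_LIMIT" in env and "MAX_TOKENS_LIMIT" in env:
        if int(env["MIN_TOKENS_LIMIT"]) > int(env["MAX_TOKENS_LIMIT"]):
            problems.append("MIN_TOKENS_LIMIT exceeds MAX_TOKENS_LIMIT")
    return problems


if __name__ == "__main__":
    env = parse_env(ENV_TEXT)
    print(check_env(env))
    print(map_model("claude-3-5-haiku-20241022", env))
```

Because both `BIG_MODEL` and `SMALL_MODEL` point at the same Doubao model here, every Claude request ends up on `doubao-seed-1-6-250615` regardless of which tier Claude Code asks for. Once the proxy is running, Claude Code is normally pointed at it through the `ANTHROPIC_BASE_URL` environment variable (for this config, presumably `http://localhost:8082`); check the project's README for the exact command.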